Wondering if someone can help me get to the bottom of what happens here occasionally. Let me give you a quick summary of what I have:

- Samsung 4K TV with FreeSync, capable of running 4K @ 60 Hz or 2560x1440 @ 120 Hz.

- Laptop with RTX 2080 (desktop version of the card).

- Connected via HDMI.

I don’t like playing games at 4K @ 60 Hz because I’d rather have frames over resolution, especially at couch distance. I normally set the desktop resolution to 2560x1440 @ 120 Hz and just play everything in Fullscreen Borderless. I've been happily using this setup since the holidays last year. Works great…

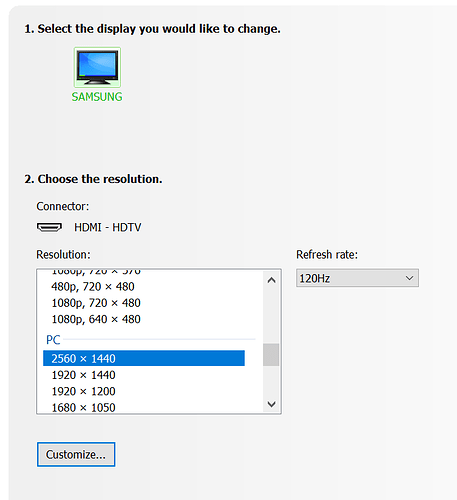

Except for something that has happened twice now. A few months back, I suddenly lost the 2560x1440 resolution. It just didn’t show up anymore as an option in either the Windows display properties or the Nvidia Control Panel. 4K @ 60 Hz was there, 1920x1080 was there, a whole lot of other resolutions… just not 2560x1440.

I did the usual Google searching and troubleshooting. I manually modified files to force the resolution to appear and tried creating a custom resolution in the Nvidia Control Panel, and neither worked. I uninstalled the drivers and did a clean installation, then rolled back to older drivers. Nothing worked. The only thing I didn’t try was reinstalling Windows.

What ended up solving that situation was changing the HDMI port on the TV. I just moved the cable to a different one and BAM, the laptop immediately went to 2560x1440 @ 120 Hz when I booted it up. Weird, but whatever. It worked and I was done dealing with it.

It happened again yesterday, though. I had everything at 2560x1440 and left the computer for a while, and it eventually went into sleep mode. I wasn’t able to wake it via the keyboard, so I just rebooted it. When it came back I knew something was off, as the color saturation was different (a lot warmer). Sure enough, it was set to 4K @ 60 Hz. This time, 2560x1440 DOES show up, but it’s only available at 30 Hz. Again, I tried troubleshooting for a while last night but nothing worked. Right now I just have it at 1920x1080 @ 120 Hz and I’m using that.

So… I figure I can change the HDMI port again, but eventually I’m going to run out of ports. I want to get to the bottom of this, because it’s really annoying. I believe Windows stores the display properties for each monitor/TV, so a reinstall of Windows 10 might do the trick, but I want to leave that as a last resort.

I see via a Google search that I’m definitely not alone in having this problem, but most of the replies are either things I’ve tried and mentioned above, or people not understanding the issue and insisting the HDMI port must not support the now-vanished resolution, which clearly isn’t the case.

Anyone have to deal with this sort of thing before? To reiterate:

- My TV supports 2560x1440 @ 120 Hz.

- My GPU supports 2560x1440 @ 120 Hz.

- My HDMI cable and port support 2560x1440 @ 120 Hz.
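
For anyone doubting the last point, here is a rough back-of-the-envelope check. It only compares active pixels per second for the modes mentioned above. It ignores blanking intervals, color depth, and chroma subsampling, so treat it as a sanity check rather than a real HDMI bandwidth calculation. The function and variable names are just mine, for illustration.

```python
def active_pixel_rate(width, height, refresh_hz):
    """Active (visible) pixels per second; blanking intervals are ignored."""
    return width * height * refresh_hz


# The modes mentioned in this post: (width, height, refresh rate in Hz).
modes = {
    "3840x2160 @ 60 Hz": (3840, 2160, 60),
    "2560x1440 @ 120 Hz": (2560, 1440, 120),
    "2560x1440 @ 30 Hz": (2560, 1440, 30),
    "1920x1080 @ 120 Hz": (1920, 1080, 120),
}

rates = {name: active_pixel_rate(*mode) for name, mode in modes.items()}

# Print the modes from most to least demanding.
for name, rate in sorted(rates.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {rate / 1e6:.1f} Mpx/s")
```

By this measure, 2560x1440 @ 120 Hz pushes fewer pixels per second than 4K @ 60 Hz, which the same port and cable are still offering, so raw link capacity alone doesn't seem to explain why the mode disappears.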