AFAIK Tom’s using Restream.io to stream simultaneously to Twitch and YouTube, but that’s about it. I’d guess Jason helped set him up.

Does anyone know how well the Unity 3D editor leverages multiple cores for its heavier operations, like baking lightmaps and junk?

For streaming specifically, all your extra cores can go toward higher-quality CPU (x264) encoding, which is generally far better than GPU or capture-card encoding.

The best value is a 1700 overclocked to match an 1800X or better, if you don’t mind doing that. But even at stock clocks, it’s a lot better than the Intel chip for streaming.

GamersNexus is a very reputable hardware testing site:

Streaming on the 7700K can work. If it must be done, it could be – it just depends on a few items of varying import:

The streamer will have to sacrifice stream quality. In part, this can be done by moving from the “Faster” x264 preset to “Veryfast” or “Ultrafast.”

The streamer may want to set the process priority for OBS, or play with CPU affinities. This quickly moves out of “works well out of box” territory and into “enthusiast project” territory, but it will help. The downside is some loss of streamer-side framerate.

More resource-intensive games will have more difficulty coping with process de-prioritization (or streaming in general), as resources get assigned to OBS rather than strictly to the game.

Lowering the bitrate, e.g. to 4Mbps, can further lighten the CPU workload, but at the cost of output quality.

In this way, Intel’s CPU has now become the “project car” product. AMD Ryzen started its life as a project car: the product you buy because you’re OK with being under the hood a few hours a day just to get the thing running perfectly. Now, with Ryzen’s initial launch issues somewhat smoothed out (but not completely), the CPU holds up well in streaming performance with minimal out-of-box tweaks. To get the 7700K to hold performance, we need quality tweaks, overclocks, and other “under the hood” modifications.

http://media.gamersnexus.net/images/media/2017/CPUs/streaming/dirt-7700k-vs-1700-obs-10mbps-perf.png

http://www.gamersnexus.net/guides/2993-amd-1700-vs-intel-7700k-for-game-streaming/page-2
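The affinity tweak in that excerpt amounts to giving the game and OBS disjoint sets of cores. Here is a minimal Python sketch of that split. It assumes Linux, where the standard library exposes `os.sched_setaffinity`. The PIDs are hypothetical, the 3-of-8 split is arbitrary, and on Windows the same thing is usually done by hand through Task Manager’s “Set affinity” option.

```python
import os

def split_cores(n_cpus: int, encoder_cpus: int) -> tuple[set[int], set[int]]:
    """Split logical CPU IDs 0..n_cpus-1 into (game, encoder) sets.

    The highest `encoder_cpus` IDs go to the encoder (OBS), the rest to the game.
    Note: how Hyper-Threading siblings are numbered varies by OS and machine,
    so a contiguous split may not line up with physical cores.
    """
    if not 0 < encoder_cpus < n_cpus:
        raise ValueError("each side needs at least one logical CPU")
    cut = n_cpus - encoder_cpus
    return set(range(cut)), set(range(cut, n_cpus))

def pin(pid: int, cpus: set[int]) -> None:
    """Restrict a running process to the given logical CPUs (Linux only)."""
    if not hasattr(os, "sched_setaffinity"):
        raise OSError("os.sched_setaffinity is not available on this platform")
    os.sched_setaffinity(pid, cpus)

if __name__ == "__main__":
    # A 4-core/8-thread chip like the 7700K, reserving 3 logical CPUs for OBS.
    game, encoder = split_cores(8, 3)
    print("game:", sorted(game), "encoder:", sorted(encoder))
    # pin(GAME_PID, game); pin(OBS_PID, encoder)  # hypothetical PIDs
```

Giving the encoder fewer cores protects the game’s framerate but leaves x264 less headroom, which is exactly the trade-off the excerpt describes.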

TL;DR: the Ryzen 1700 is what you want for streaming, unless you use a separate capture system.

Yep, if you aren’t willing to adjust any settings and are streaming 1080p at 60fps, the extra cores are worthwhile.
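The two knobs from the Gamers Nexus excerpt, the x264 preset and the bitrate, map directly onto ffmpeg’s libx264 options, and OBS’s x264 encoder uses the same preset names. Below is a sketch that uses ffmpeg as a stand-in for OBS (the input file and RTMP URL are placeholders). It also does the arithmetic for how thinly a given bitrate gets spread at 1080p60.

```python
# x264 presets, fastest (least CPU, lowest quality per bit) to slowest.
X264_PRESETS = ["ultrafast", "superfast", "veryfast", "faster", "fast",
                "medium", "slow", "slower", "veryslow", "placebo"]

def x264_args(preset: str, bitrate_kbps: int) -> list[str]:
    """Build ffmpeg video-encoding arguments for capped-bitrate streaming."""
    if preset not in X264_PRESETS:
        raise ValueError(f"unknown x264 preset: {preset}")
    rate = f"{bitrate_kbps}k"
    return ["-c:v", "libx264", "-preset", preset,
            "-b:v", rate, "-maxrate", rate,
            "-bufsize", f"{2 * bitrate_kbps}k"]

def bits_per_pixel(bitrate_kbps: int, width: int, height: int, fps: int) -> float:
    """Average bits available per pixel per frame at the given bitrate."""
    return bitrate_kbps * 1000 / (width * height * fps)

if __name__ == "__main__":
    # Placeholder input and ingest URL; replace with real values.
    cmd = (["ffmpeg", "-i", "input.mkv"] + x264_args("veryfast", 4000)
           + ["-f", "flv", "rtmp://example.invalid/live/STREAM_KEY"])
    print(" ".join(cmd))
    print(f"{bits_per_pixel(4000, 1920, 1080, 60):.4f} bits/pixel at 1080p60")
```

At 4Mbps and 1080p60, each pixel gets roughly 0.03 bits per frame. A faster preset spends less CPU squeezing quality out of each of those bits, which is why both tweaks cost visible quality.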

I haven’t run AMD since the release of the Core 2 Duo. That said, I did pick up a 1700X when I was putting together a PC for my son. I ran into some of the initial-release BIOS / fickle RAM timing problems, ended up giving him my i7-6700K system, and have been running the AMD myself. Since the initial BIOS updates resolved the mobo / memory oddities, it’s been great. I imagine the 6700K is faster at some things, but honestly, it’s not noticeable on the desktop, and gaming is a moot point when running a 1080 Ti.

I’m going to keep an eye on them, as I’ll probably want a new mobo/CPU next year.

I feel less iffy about it if people here are using them successfully.

Ah, never mind, folks. Turns out it’s garbage.

Well played.

Are you kidding? This shit will kill in servers and there is CRAZY money in servers. I would buy AMD stock at this point!

I am running the 6-core Ryzen at 2K with a 1080 Ti and haven’t had any problems at all. It might not be the most optimal pairing, but it works for me. Hopefully the 2 extra cores will help me stave off the next upgrade for a few years.

Nah. The price difference between Xeon and Threadripper is largely meaningless for servers, and Intel will compete on price there anyway.

I don’t think they have announced anything for the server chips yet, so maybe those will be better. But Threadripper is a large chip with a higher TDP, and it runs hot enough that AMD is recommending liquid cooling. That’s not only a cost/complexity issue but also a physical-density one, which can matter a lot.

It looks like the performance and price are promising at this point, but power and cooling concerns could easily stand in the way of wide adoption by larger organizations.

What kind of efficiency can this thing pull? How is its performance per watt?

’Cause that’s generally what drives the big servers, isn’t it? How cheaply they can deliver computational power?

The fact that they’re running so hot makes me question whether they’d have a place in a huge server rack.

Unless you just mean some standalone server.

Well, efficiency is also a huge factor for cloud operators, because you cut down on electricity bills for powering and cooling. The big operators will literally upgrade an entire data center to a newer CPU to do just that.
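The electricity argument is simple arithmetic. Here is a minimal sketch that assumes a server runs 24/7. It uses PUE (power usage effectiveness: total facility power divided by IT equipment power) to fold in cooling overhead. The 70W per-server difference, the PUE of 1.5, and the 1000-server fleet are illustrative values, not measurements.

```python
HOURS_PER_YEAR = 24 * 365  # 8760, ignoring leap years

def annual_kwh(watts: float, pue: float = 1.0) -> float:
    """Energy drawn by a constant load over a year, scaled by the facility's PUE
    (PUE = total facility power / IT equipment power, so > 1.0 includes cooling)."""
    return watts * HOURS_PER_YEAR * pue / 1000

if __name__ == "__main__":
    delta_w = 70    # illustrative per-server power difference
    pue = 1.5       # illustrative PUE
    servers = 1000  # illustrative fleet size
    per_server = annual_kwh(delta_w, pue)
    print(f"{per_server:.1f} kWh/year per server, "
          f"{per_server * servers / 1000:.1f} MWh/year across {servers} servers")
```

Multiply by your electricity rate to get dollars. Because the saving scales with fleet size, a whole-data-center CPU refresh can pay for itself.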

Ya, that’s the use case I was talking about.

The real “money” in servers these days is in huge server farms that run the cloud services for places like Amazon and Google. And that’s all about efficiency in terms of being able to get as much oomph as possible out of every watt of electricity.

I’m kind of skeptical that these processors fit that role, because the fact they are so hot indicates they aren’t efficient in terms of power.

These aren’t server parts; they’re enthusiast ones. So they’re actually clocked higher than Epycs will be. Intel does exactly the same thing with Core X-series vs. Xeons.

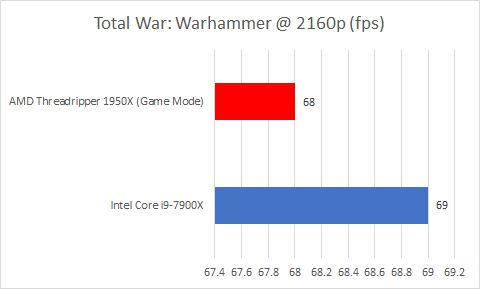

TR has no problems at all in power efficiency when compared to the Core i9s. The closest comparison in terms of price is the TR 1950X vs. the Core i9-7900X. They’re both $1000 chips with almost the same base clock (3.4GHz for TR, 3.3GHz for the Core). TR has 60% more cores (16 vs. 10) and a TDP only about 30% higher (180W vs. 140W).
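Here are those ratios worked out, using only the figures quoted above. Keep in mind that TDP is a thermal design rating, not measured power draw, so watts per core here is a rough proxy for efficiency.

```python
from dataclasses import dataclass

@dataclass
class Cpu:
    name: str
    cores: int
    base_ghz: float
    tdp_w: int

def pct_more(a: float, b: float) -> float:
    """How much larger a is than b, in percent."""
    return (a / b - 1) * 100

tr = Cpu("Threadripper 1950X", 16, 3.4, 180)
i9 = Cpu("Core i9-7900X", 10, 3.3, 140)

print(f"cores: +{pct_more(tr.cores, i9.cores):.0f}%")  # 60%
print(f"TDP:   +{pct_more(tr.tdp_w, i9.tdp_w):.0f}%")  # 29%
for c in (tr, i9):
    print(f"{c.name}: {c.tdp_w / c.cores:.2f} W of TDP per core")  # 11.25 vs. 14.00
```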

The other possible comparison would equalize core count rather than price, which means the 16-core i9-7960X. In that comparison, TR shows a <10% increase in power usage in exchange for a >20% increase in clock speed.
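Here is a quick check of those two percentages. The Threadripper figures come from the post above. The i9-7960X figures (2.8GHz base, 165W TDP) are Intel’s listed specs and are not quoted in the thread, so verify them before relying on this. Both chips have 16 cores, so the comparison comes down to clocks and TDP.

```python
def pct_more(a: float, b: float) -> float:
    """How much larger a is than b, in percent."""
    return (a / b - 1) * 100

# TR 1950X: from the post. i9-7960X: Intel's listed base clock and TDP.
tr_clock_ghz, tr_tdp_w = 3.4, 180
i9_clock_ghz, i9_tdp_w = 2.8, 165

clock_gain = pct_more(tr_clock_ghz, i9_clock_ghz)  # about 21%
tdp_gain = pct_more(tr_tdp_w, i9_tdp_w)            # about 9%
print(f"base clock: +{clock_gain:.1f}%, TDP: +{tdp_gain:.1f}%")
```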

It’s going to be a similar story on servers. You can’t actually get 32 Xeon cores from Intel at a 170W TDP; it’ll have to be a dual-socket server with CPUs that have a combined TDP of well over 200W. (The best comparison would probably be a single-socket Epyc 7551 vs. dual Xeon E5-2680 v4s. The CPUs cost the same; the two Xeons combined have 4 fewer cores but a bit more clock speed, so performance will be about the same, at 170W vs. 240W.)
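Here is the same per-core arithmetic for the server comparison, using the figures as quoted in that post: 32 cores at 170W vs. 28 cores at 240W combined. TDP ratings vary by SKU and configuration, so check the vendors’ spec sheets before leaning on these numbers.

```python
# Figures as quoted in the post: one 32-core Epyc 7551 at 170 W versus
# two Xeon E5-2680 v4 with 4 fewer cores in total (28) at 240 W combined.
configs = {
    "1x Epyc 7551": (32, 170),
    "2x Xeon E5-2680 v4": (28, 240),
}

watts_per_core = {name: tdp / cores for name, (cores, tdp) in configs.items()}
for name, wpc in watts_per_core.items():
    print(f"{name}: {wpc:.2f} W of TDP per core")

extra = (240 / 170 - 1) * 100
print(f"Dual-Xeon TDP is {extra:.0f}% higher")
```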

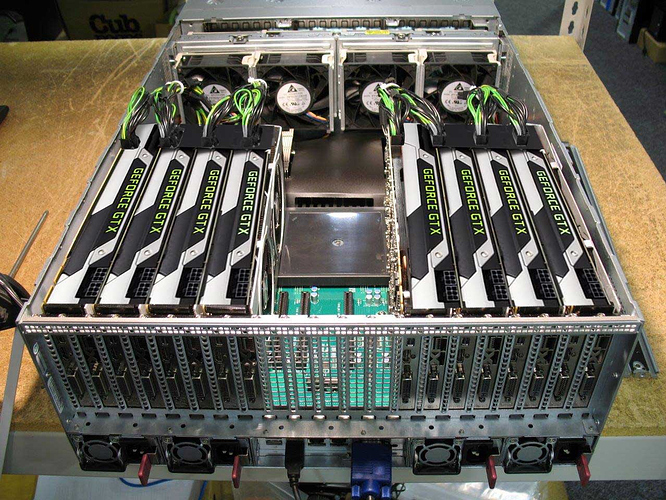

You do realize that that’s a 4U server, right?

So? That’s still going to have a higher power density than, e.g., a 2U dual-socket (64-core) Epyc.

So? Even a 2U can support two Threadrippers with ease.

To answer your question, no, people don’t generally rack iPhones in datacenters ;) But if they did, the efficiency would be literally off the charts.

People also don’t tend to rack Intel Atom boxes. 90W to 140W per CPU is pretty standard stuff, even in a 1U, and in a 2U you could easily put in 250W+ CPUs. Lots of cores is an insanely useful thing to have in a racked server. Hence… Threadripper.
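One way to make the density argument concrete is CPU TDP per rack unit. This rough sketch uses the per-CPU figures from that post. It assumes dual-socket chassis, since the post doesn’t say how many sockets, and it counts only CPU TDP, ignoring RAM, drives, fans, and PSU losses.

```python
def cpu_watts_per_u(sockets: int, tdp_w: float, height_u: int) -> float:
    """CPU TDP per rack unit for one chassis (CPU only; everything else ignored)."""
    return sockets * tdp_w / height_u

# Illustrative dual-socket chassis using the per-CPU figures from the post.
examples = {
    "1U, 2 x 140 W": cpu_watts_per_u(2, 140, 1),
    "2U, 2 x 250 W": cpu_watts_per_u(2, 250, 2),
}
for label, w in examples.items():
    print(f"{label}: {w:.0f} W of CPU TDP per U")
```

By this crude measure, a 2U box with two 250W CPUs is no denser than a 1U box with two 140W CPUs.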