That’s the story on Zen 3: it looks to be extremely power efficient. IPC is unchanged, but it clocks much higher and presumably runs cooler.

Good point. nVidia continues to offer the option to be bilked out of $200 to pay the Gsync tax. Maybe I’m crazy, but I just prefer buying from companies that don’t act in a consistently unethical, anti-consumer and anti-competitive fashion.

Not for long. This change sounded the death knell for hardware gsync. Everything will be VESA VRR from now on.

We don’t know what the clocks were for the test chip, so we can’t conclude that IPC is unchanged. It could be that IPC is improved and clocks are slightly higher than the 9900K, or that clocks are a little lower than the 9900K and they have achieved massive improvements in IPC. We’ll see!

IPC could have improved, but it looked like a process shrink and clock increase from all the various articles.

It’s not really being bilked. Gsync is a superior tech and at the time it launched it was the only tech. A $200 premium on a display I only replace every 5+ years or so at most was well worth it to me.

EDIT: And by superior tech, I mean that Freesync doesn’t operate when frames drop below 48 FPS and that’s when I need it most.

It absolutely can do that; lots of cheap monitors simply didn’t implement VRR well. Freesync 2 is supposed to address that via certification.

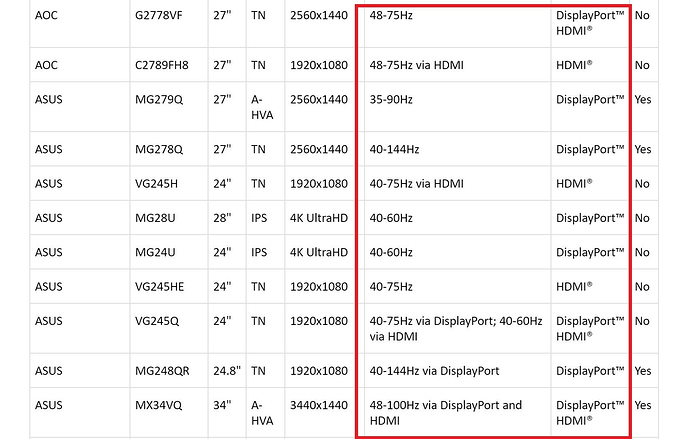

But not much better than 48, right? I mean this was right off of AMD’s website:

So in most cases, we’re talking 40, not 48. I feel like my point still stands that Nvidia wasn’t just “bilking” customers. They had a more expensive solution, but the dedicated hardware module was there for a reason. It just became untenable with the move into 4k and HDR, where the cost of the module neared $500. That much of a price gap was going to be market suicide, so here we are with a hopefully improved Freesync 2. Tech improves and moves on, but Gsync was still worth every penny for me.

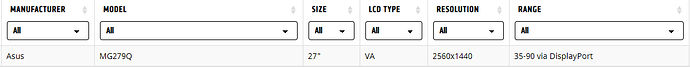

I don’t trust that screenshot, 'cause my Asus MG279Q monitor is not A-HVA, it’s IPS.

Blame AMD, not me. :) It’s a screenshot from an article posted last summer, but their website says it’s VA as of today.

The Freesync 1 spec supports 9Hz through 240Hz, but that’s just the possible range; it isn’t enforced.

Freesync 2 specification mandates that monitors must support 0Hz through their maximum refresh rate.

AMD also has a feature called “low framerate compensation” (LFC) in Freesync 1. When you’re on a Freesync monitor and can’t generate enough FPS to reach its lowest variable refresh rate, the driver essentially repeats frames so the monitor thinks you are at 48fps or whatever, and VRR remains active. This has been in AMD drivers for 3 years now.
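For anyone curious how the frame-repeating trick works in principle, here’s a rough Python sketch. This is not AMD’s driver code: the function name `lfc_refresh`, the rule of picking the smallest whole number of repeats that reaches the panel’s floor, and the example ranges are all assumptions for illustration.

```python
import math
from typing import Optional, Tuple


def lfc_refresh(fps: float, min_hz: float, max_hz: float) -> Optional[Tuple[int, float]]:
    """Hypothetical LFC sketch: return (times each frame is shown, panel refresh in Hz),
    or None if no whole repeat count keeps the refresh inside [min_hz, max_hz]."""
    if fps <= 0:
        return None
    if fps >= min_hz:
        # No repeats needed; above the range the panel simply tops out at max_hz.
        return (1, min(fps, max_hz))
    # Smallest whole number of repeats that lifts the refresh rate to the floor.
    repeats = math.ceil(min_hz / fps)
    refresh = fps * repeats
    if refresh > max_hz:
        # Range too narrow: every multiple that reaches the floor overshoots the ceiling.
        return None
    return (repeats, refresh)


print(lfc_refresh(30, 48, 144))  # 48-144Hz panel at 30 FPS
print(lfc_refresh(40, 48, 75))   # narrow 48-75Hz panel at 40 FPS
```

In this sketch, a 48-144Hz panel at 30 FPS shows each frame twice at 60Hz, while a narrow 48-75Hz panel at 40 FPS has no whole multiple that fits (80Hz overshoots 75Hz), so VRR would drop out.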

But from AMD’s own website, I can’t find a single monitor that supports anything like that 9Hz. The absolute lowest I can find in the real world (i.e. not in theory) is 30Hz for the LG 27MK400H. Pretty much every monitor has a floor between 40 and 48Hz.

I’m not trying to sell anyone on Gsync, I’m just pointing out that the hardware module was there for a reason, not just to bilk customers. I was done with Gsync going forward because $500 in order to get 4K/HDR was just untenable, and Nvidia clearly agrees. Tech moves on. :)

Yes, and that’s because they could get away with it. With Freesync 2 and Gsync certifications coming into play, you’ll have a badge that says “this monitor really works well with VRR”.

For sure. And I’m really looking forward to that, it’s a nice improvement in the display industry!

Incidentally, that’s exactly how Nvidia made non-Gsync-certified Freesync monitors look like shit in their CES demo area. They ran them at framerates below the bottom of their VRR range, and since Nvidia drivers don’t support that low framerate compensation stuff, they flickered.

If Nvidia holds fast to not supporting LFC, the vast majority of non-certified Freesync monitors will be pretty disappointing on Nvidia hardware. Which seems to be how Nvidia wants it.

It sounds to me like you’re arguing people should pay an extra $200 for the privilege of playing games at 9fps… Maybe spend the extra money on a GPU that is capable of frame rates above 40fps?

I… what? I’m confused. I’m not sure if that’s a joke that went over my head or you completely misunderstood what I was saying (likely due to poor wording on my part, if so!).

What I was saying is that, depending on the monitor, Freesync doesn’t work below 48/40/whatever FPS. It’s during those framerate hiccups, when performance drops into that range, that I feel tech like Gsync/Freesync has the most value: it greatly smooths out the display during dips in performance like that. You don’t get nasty hitches/stutters/tears; instead it moves along smoothly. Even on top-end hardware, you will have framerates dip momentarily.

Put another way, I don’t care about Gsync/Freesync when I’m at a steady 60fps because it’s not doing anything. It’s when I hit a patch of 30-40 FPS (or worse) that I want things smoothed out, and Gsync does that reliably. It sounds like Freesync 2 will as well, so that’s a great improvement that eliminates the need for a Gsync module.

Is the Ryzen 3 generation expected to be immune to Meltdown and Spectre-type exploits by any chance?

Meltdown doesn’t affect AMD CPUs, so yeah.

Spectre won’t be eliminated for years. It’s unknown whether it has hardware mitigations.

It’s slightly worse than that. If you sort AMD’s list by range, it looks like only a handful of monitors support less than 48Hz. 48Hz seems to be the main floor.