Hmmm. Interesting, thanks for the link!

In some ways I have to agree with the OP, but on the other hand I’ve already upgraded, kind of.

My 27" standard-def TV was on its last legs, so I went out and picked up a 32" Toshiba HD-Ready monitor for reasonably cheap. What really prompted me to go HD over a replacement SD set was that I already had an HD cable box in the form of the DVR I pay for through my cable service, which meant I could buy HD-Ready instead of a full HDTV and wouldn’t need a new cable box or DVR. The only purchasing snowball effect I’ve experienced was buying an upscaling DVD player. I don’t plan on replacing my DVD collection. Hell, I still haven’t replaced my VHS collection; I’m a buy-once kind of guy.

So, if I couldn’t have done it under just $1k total (including the DVD player, wall mount, all the necessary cables, etc) and no extra recurring costs, I probably wouldn’t have. The problem with HD IMO at the moment is the snowball effect which can cause some people to end up spending twice as much or more than planned.

“Less than 50%”? Technically correct, I suppose, in that 10% is less than 50%. It would be staggering if HD had managed to achieve 50% penetration already, given that the first ATSC-compliant sets didn’t go on sale until 1998 and it took color TV nearly twenty years to cross the 50% mark.

I suspect, though, that the sales volume has already exceeded the 50% threshold.

The 10% in that article referred to “current subscription rate for HD programming … among all digital video subscribers”.

According to this news blip, 1 in 6 U.S. households already owns an HD-capable set.

Anyhow, good for you that the Wii caters to non-HD gaming.

DOOM was actually 320x200!

I finally advanced a baby step towards understanding the naysayers who don’t really think the difference is that meaningful. Over the holidays I was over at my in-laws’, and they had a Toshiba HDTV set on which even I couldn’t really tell the difference between HDTV and SDTV. That made me think either (a) some “HDTVs” are so crappy they’re incapable of demonstrating the difference, or (b) my father-in-law’s TV was set up incorrectly, using composite cables so it wasn’t actually showing HD content. I tried to check, but the set was buried in a wall unit and he looked nervous with me poking around, so I figured it wasn’t worth the bother.

But on a good Panasonic/Pioneer Plasma, or a Sony/Sharp LCD, the difference between HDTV and SDTV is as stark as the difference between colour and black and white. The only thing “subjective” is whether or not you value that difference enough to upgrade if you haven’t already.

I’ll admit it: I’m a videophile. I like tuning as much image quality as I can out of my equipment. Once I went 1080p, and started viewing the glory that is PC gaming at 1920x1080 on a 42" LCD, there was no turning back. Think Rainbow Six Vegas looks good on the 360? Even if you set your 360 to 1080p, all it’s doing is scaling up the image, not changing the resolution the game runs in. Anyway, I had a couple friends over and they were skeptical, so I fired up the 360 version first (set to 1080p) then ran the PC version (1920x1080, 2x AA, 8x AF) and swapped between the two using the input select. They were absolutely stunned. There is no comparison.

This is why I buy PC versions of games if they’re available. Not only are they cheaper, but they run better on my setup than their console counterparts, and they look amazing on the bigscreen. Plus, I get mouse and keyboard control: This plus This plus This equals gaming nirvana.

I think in some respect you are right with (a). In stores, some of the lower end models do look pretty crappy to me. I don’t know what cabling they have for those, but I assume (which is probably a bad idea in this case) that it is the same as the higher end models – be it component or whatever. But, on those the colors seem muted and there is a high degree of artifacting during fast motion on screen.

But I must say I am more than happy with my Toshiba HD-ready TV. The color isn’t nearly as crisp as a Sony/Sharp/Samsung model, but in my mind it’s easily recognizable as better than SD, and for the price I couldn’t be happier. Of course, I have everything running through component and HDMI (and S-Video for Xbox/PS2), so I am inclined to think cabling might be the issue with your in-laws.

It’s all very confusing. What resolution do Cox and DirecTV send HD out in? Is it 720p, 1080i or what?

Both, if I’m not mistaken.

Yeah, resolution is determined by the network doing the broadcasting. Some use 1080i, others prefer 720p. Cable and sat providers, while they may recompress the feed, do not usually change the native resolution. I think the set-top boxes they use can usually scale the signal to whatever your TV best accepts.

I can see how it seems confusing, but there really aren’t that many factors at play here.

To break it down:

HD feeds are going to come in as either 720p or 1080i. Any set should be able to handle either signal and scale it appropriately. If you have a fancy new 1080p set then a 1080i signal will not need to be downscaled.

Sets with a tuner can be plugged into an antenna to watch TV. Without a tuner you need an external box (such as a cable box or a PVR device that has a tuner). Tuners really are ONLY useful if you need to watch broadcast television with an antenna. Cable and satellite companies will all provide a settop box if you use their service.

Connections:

Composite - 480i

S-Video - 480i

Component - 480i, 480p, 720p, 1080i, 1080p

VGA - 480i, 480p, 720p, 1080i, 1080p, (plus computer resolutions)

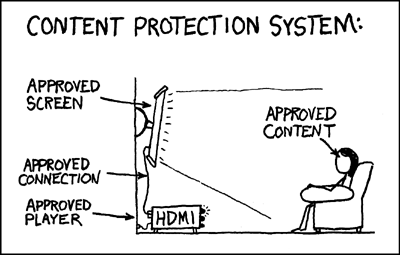

DVI/HDMI - 480i, 480p, 720p, 1080i, 1080p, (plus computer resolutions)

Sources:

Standard TV - 480i

Wii, PS2 - 480i, 480p* (with component cables)

DVDs - 480i, 480p

HD TV - 720p, 1080i

XBox - 480i, 480p*, 720p*, 1080i* (with component cables)

HDDVD/Bluray - 1080p (although players usually can output anything)

XBox360/PS3 - 480i, 480p, 720p, 1080i, 1080p

PC - Anything
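
For anyone who wants to sanity-check their own setup, here is a rough Python sketch of the two lists above as lookup tables. The names (`MODES`, `CONNECTIONS`, `SOURCES`, `best_mode`) are made up for illustration, and the ranking of 1080i above 720p is only an assumption to give the modes an order. It also doesn't model which ports a device physically has, so treat it as a quick cross-reference rather than anything authoritative.

```python
# Modes in a rough low-to-high order (ranking 1080i above 720p is an assumption).
MODES = ["480i", "480p", "720p", "1080i", "1080p"]

# What each connection can carry, per the Connections list above.
CONNECTIONS = {
    "composite": {"480i"},
    "s-video": {"480i"},
    "component": set(MODES),
    "vga": set(MODES),
    "dvi/hdmi": set(MODES),
}

# What each source can output, per the Sources list above.
SOURCES = {
    "standard tv": {"480i"},
    "wii": {"480i", "480p"},
    "ps2": {"480i", "480p"},
    "dvd": {"480i", "480p"},
    "hd tv": {"720p", "1080i"},
    "xbox": {"480i", "480p", "720p", "1080i"},
    "xbox 360": set(MODES),
    "ps3": set(MODES),
}


def best_mode(source, connection):
    """Return the highest listed mode shared by source and connection, or None."""
    usable = SOURCES[source] & CONNECTIONS[connection]
    return max(usable, key=MODES.index, default=None)


print(best_mode("ps2", "composite"))   # 480i
print(best_mode("ps2", "component"))   # 480p
print(best_mode("xbox", "component"))  # 1080i
```

A `None` result just means the two lists share no mode (an HD feed over S-Video, say); in practice the box would have to downscale to 480i first.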

The only thing I’d like to add to Reldan’s excellent summation is this: If you have an LCD, it will have a native resolution that it up/downscales everything to anyway. My Vizio 32" has a native 720p, so anything that I plug into it (be it DVD, PS2, set-top box, whatever) scales to that.

So in a lot of ways, worrying about the input resolution doesn’t really matter.

It could matter if your scaler isn’t good.

That’s true, but most modern LCDs have pretty good scalers.

Scaling is the lifeblood of fixed-pixel displays. It isn’t just LCDs; every HD set has a native resolution that the input signal will be scaled to. The only time you usually run into issues is when you try to watch 480i television, because it takes a lot of effort to take those 240 lines per field and blow them up into 720 or 1080 lines without it looking like shite.
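
To put rough numbers on that, here is a back-of-the-envelope Python sketch. It assumes, as the post does, that each 480i field is treated as a standalone 240-line picture; a real deinterlacer may combine fields, so the true ratio can be kinder than this. The function name `vertical_scale` is just for illustration.

```python
def vertical_scale(source_lines: int, native_lines: int) -> float:
    """How many output lines the scaler must produce per input line."""
    return native_lines / source_lines


# One field of a 480i signal carries half the frame's lines.
FIELD_LINES_480I = 480 // 2  # 240

for native in (720, 1080):
    factor = vertical_scale(FIELD_LINES_480I, native)
    print(f"480i field -> {native} lines: {factor}x")

# For comparison, a 720p signal on a 1080-line panel:
print(f"720p -> 1080 lines: {vertical_scale(720, 1080)}x")
```

So a 480i field gets stretched 3x on a 720p panel and 4.5x on a 1080p one, versus only 1.5x for a 720p signal on a 1080-line panel, which is why SD is where a weak scaler shows.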

Even HD CRT sets usually only handle at most 540p and 1080i (they scale 720p signals down to 540p), although they do a great job of handling 480i since they can pretty much output that without needing to do anything special. It’s a shame manufacturers are turning their backs on this technology.

Yeah, the games are cheaper, but the hardware you need to push 1080p at a reasonable framerate costs a lot more than a console and has to be updated more often. Add in no friends lists, no built-in VoIP, no achievements, driver issues, etc., and the console has quite a few advantages.

Assuming that the PC has become an essential part of household living, the cost difference between a functional PC and a gaming PC that can push 1080p is about the cost of a PS3.

At CES this month BD/HD-DVD combo players and “Total HD” combo discs that include both formats are being announced so that at least should help deal with some of the format-wars annoyance…