I loved Flickr.

On a smaller scale, so did Yahoo’s multiplayer parlor games.

Guess what we did to those?

Ahh yes, I remember reading about that years ago. Old ladies playing mahjongg and whatnot online. They weren’t ironically bearded millennials, so I guess Mayer shitcanned that service too.

Moral to this story is to value your users, no matter who they are.

My understanding is that a lot of this came about because of child porn and grooming.

So those users they didn’t want. But rather than do things rationally, they decided to just burn it all to the ground and use shitty algorithms that detect literally everything as porn or something.

I’m not sure at what level the call was made, but I think the final decision there was that all Yahoo pages had to be made SSL compliant, and nobody wanted to devote the engineering resources to retrofit the parlor games. There was a brief, abortive effort to develop a new suite of parlor games, but it was problematic (trying to jump on the micropayments bandwagon, to begin with), and killed internally amid some bad politics. I felt bad about it, though – the engineer put in charge of that reboot is a really cool guy. Not sure what he’s doing now.

That reminds me of that Silicon Valley episode where they got a bunch of students to look at dick pics to help refine an algorithm, or something.

FTFY.

What is My Brother, My Brother and Me?

An advice show for the modren era.

Modern. Also, we in the know call it muhbambam.

Over-under on Yahoo/Oath properties left alive in 3 years? 0? Okay, maybe yahoo.com email addresses will continue to work… Verizon must surely understand that this whole purchase was totally worthless by now.

According to the analysts, Verizon purchased Yahoo for their advertising tech. So that’ll last, at least.

Mayer’s Yahoo webmail is also quite good, although very few people switched from gmail.

Well said.

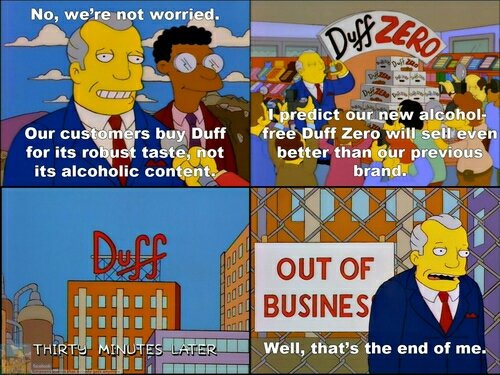

I am still surprised almost weekly at how little value tech companies here in the Bay Area put on actual users and customers. It may be the same elsewhere, but I see it firsthand here.

It's like they want some unicorn user instead of the actual users they are getting.

It's just bizarre the way companies will toss entire communities in the trash if they don’t meet their ever-changing internal criteria for what their business should be about.

Getting users who stick around is no trivial thing; they should treat ’em like gold.

They only care about growth. Not profits, not revenue, not users. And not organic growth, either. Massive, overwhelming growth. That attitude is endemic in Silicon Valley.

My one-time manager and department head at a major Bay Area biotech company fucking worships Marissa Mayer and Sheryl Sandberg, because she aspires to be a celebrity executive/self-help philosopher herself and therefore they can do no wrong.

I mentioned once that Marissa Mayer had drawn criticism for having a mother’s room built behind her office while banning employees with kids from telecommuting, and this person immediately corrected me with “Marissa,” because I shouldn’t use her last name, as though there were other Marissas. Then she exclaimed, “Yeah, she’s great!” as though I’d said something positive.

From the sounds of it child porn was out of control so I guess the best solution is to burn it down, all down.

From Reddit:

https://www.reddit.com/r/OutOfTheLoop/comments/a2r4h4/whats_the_deal_with_tumblr_banning_all_nsfw

Tumblr management is incompetent.

When they remove child porn images, they tend to leave the original posts and their accompanying notes intact. So, basically, they’re leaving up lots of these “Hey, we removed illegal material that was here, but please click below to see 200 more blogs that might have what you’re interested in” posts. They are essentially accomplices to the distribution of child porn.

The child porn pictures often get reblogged over a thousand times before they are removed, which can take a week or even months. A lot of child porn images have been on the platform for several months, even though numerous users (but not all of them!) who reblogged the illegal image have been banned. Even worse, banned users just recreate their accounts, sometimes incrementing a number at the end of the account name. Those numbers eventually reach double digits; they just keep coming back.

There are also “beacon” images that don’t get removed at all, since they only contain text in the image such as “reblog if you want to have sex with children”. Using these, pedophiles can easily find hundreds of other pedophile blogs and reconnect back to the grid after they are forced to recreate their account. Some of the beacons have existed for several years.

The pedophiles on Tumblr are fearless. There are people taking selfies, showing their faces, and then showing themselves jacking off to child porn on their computer screens. There are people openly trading their phone numbers to connect on WhatsApp and trade images there. Although the international numbers I’ve seen have mostly been from China, Vietnam, Mexico, Brazil, and other places a bit further from the Western world, there are also users from Europe and the USA posting their phone numbers on those beacon posts. There are posts about “pedo pride,” and the whole thing is outright crazy. There are thousands of active pedophiles on Tumblr. THOUSANDS.

And what does Tumblr administration do? They nuke all the NSFW. I’m not entirely sure whether this will truly eradicate the child porn from Tumblr, considering the nature of the Tumblr pedophile community. We’ll see.

What should they have done? First of all, they should have made reporting cleaner. There’s no obvious way to find the reporting functionality at all if all you do on Tumblr is follow others. Their help center has zero results for “child porn” or “illegal,” and when you finally find the help page for reporting content, it informs you that you need to click the “SHARE” button on the child porn image to report illegal content. Not kidding. Who the hell designed this system? I don’t know about you, but I’m sure as hell NOT clicking SHARE on any child porn image, even when I know it opens a popup where I can choose to report it.

Second, they should delete deeper. Most importantly, they should delete the posts when they delete the associated images. Pedophiles are delighted to see empty frames of pictures that have been removed for violating rules, because it allows them to find new blogs that reblogged the illegal content in question. Many of those blogs are still going to be completely intact. It’s also not uncommon to see child porn images with several thousand notes and almost everyone involved with it banned, but the picture intact. Seriously, if half of the users reblogging a certain image are already banned, maybe they need to review that image to see if it has to be deleted.

Third, they should ban people who hit “like” on illegal images. A large number of pedophiles do not reblog anything; they instead hit the like button, which keeps their activity a bit more “stealth” from the masses. Other pedophiles can still find the material by browsing the liked-by lists. Apparently accounts that like illegal content don’t get banned that easily, either, which is utterly ridiculous.

There are many other things they could do, from verifying users via SMS to building better tools for exploring the site (to bring the things that hide in darkness into the light). All of the effective measures, however, require quite a lot of work. Banning NSFW is just a policy change and isn’t expensive to enforce, although it might be expensive in terms of users lost.

PS. Oops, I ended up writing a wall of text. I’ve been meaning to write a longer article about this situation for a while now, but if this move ends up killing the child porn communities on Tumblr, then maybe I won’t have to. I have so much to say about this, but ultimately it boils down to a single thing: Tumblr management is incompetent and grossly negligent in handling content removal.